A blockchain experiment gives rise to a swarm of decentralized learning robots.

A team of scientists in Belgium may have made a significant advance in artificial intelligence (AI) by using blockchain technology to enable decentralized training for autonomous AI agents. Their research addresses one of the pressing challenges in the field: protecting machines from both physical attacks and cyberattacks while preserving their ability to learn in a decentralized way.

If the approach holds up, the use of blockchain in this context could change not only how AI is developed but also how these systems function in the real world.

In the experiment, researchers simulated an environment where individual AI agents, operating autonomously, could learn and coordinate their actions. These agents were able to communicate with each other using blockchain technology, which served as a decentralized and secure platform for sharing information.
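To give a rough sense of how a shared ledger can make the information exchanged between agents tamper-evident, here is a minimal sketch in Python. It is not the researchers' implementation: the `Ledger` class, the `block_hash` helper, and the payload format are all hypothetical, and a real blockchain would add consensus, signatures, and networking on top.

```python
import hashlib
import json


def block_hash(index, prev_hash, payload):
    """Hash a block's contents together with the previous block's hash."""
    body = json.dumps(
        {"index": index, "prev": prev_hash, "payload": payload},
        sort_keys=True,
    )
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


class Ledger:
    """Hypothetical append-only ledger shared by all agents (illustrative only)."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first block

    def __init__(self):
        self.blocks = []

    def append(self, payload):
        """Add an agent's message, chaining it to the previous block."""
        prev_hash = self.blocks[-1]["hash"] if self.blocks else self.GENESIS
        index = len(self.blocks)
        block = {
            "index": index,
            "prev": prev_hash,
            "payload": payload,
            "hash": block_hash(index, prev_hash, payload),
        }
        self.blocks.append(block)
        return block

    def is_valid(self):
        """Recompute every hash; any edit to an earlier block breaks the chain."""
        prev_hash = self.GENESIS
        for i, block in enumerate(self.blocks):
            if block["index"] != i or block["prev"] != prev_hash:
                return False
            expected = block_hash(block["index"], block["prev"], block["payload"])
            if block["hash"] != expected:
                return False
            prev_hash = block["hash"]
        return True


# Two agents publish (made-up) model updates to the shared ledger.
ledger = Ledger()
ledger.append({"agent": "robot-1", "update": [0.1, 0.2]})
ledger.append({"agent": "robot-2", "update": [0.3, 0.4]})
print(ledger.is_valid())
```

Because each block's hash covers the previous block's hash, quietly rewriting an earlier message would be detected by any agent that re-runs `is_valid()`.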

This created a "swarm" of AI learning models, where each agent contributed its learning outcomes to build a larger, more advanced AI system. The key advantage of this method was that the larger system benefited from the collective intelligence of the entire swarm without needing to access the data held by each individual agent. The decentralized nature of the blockchain ensured that data privacy and security were maintained throughout the process.

The researchers' approach is known as "decentralized federated learning." In traditional machine learning, training data is typically pooled in a centralized database, which makes it vulnerable to breaches and limits its use in privacy-sensitive fields. In decentralized federated learning, by contrast, each agent trains on its own data and shares only what it has learned, so the AI system can improve without exposing individual data sources.
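The core idea can be illustrated with a toy example. The sketch below is an assumption-laden simplification, not the method used in the study: each agent fits a one-parameter model `y = w * x` on its own private data, and only the resulting weight is averaged across agents. The function names, the data, and the learning rate are all made up for illustration.

```python
def local_update(w, data, lr=0.01, steps=20):
    """Run a few gradient-descent steps on one agent's private data."""
    for _ in range(steps):
        # Gradient of the mean squared error for the model y = w * x.
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_round(w_global, agents):
    """Each agent trains locally; only the weights are shared and averaged."""
    local_weights = [local_update(w_global, data) for data in agents]
    return sum(local_weights) / len(local_weights)


# Hypothetical private datasets, all generated from y = 3 * x.
# The raw (x, y) pairs never leave the agent that owns them.
agents = [
    [(1.0, 3.0), (2.0, 6.0)],
    [(3.0, 9.0), (4.0, 12.0)],
    [(0.5, 1.5), (1.5, 4.5)],
]

w = 0.0
for _ in range(50):
    w = federated_round(w, agents)

print(round(w, 6))
```

In this toy setup the shared weight converges toward 3, the value underlying every agent's data, even though no agent ever sees another agent's data points.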

This method is particularly suited for applications where continuous autonomous learning is required or where data privacy is critical.

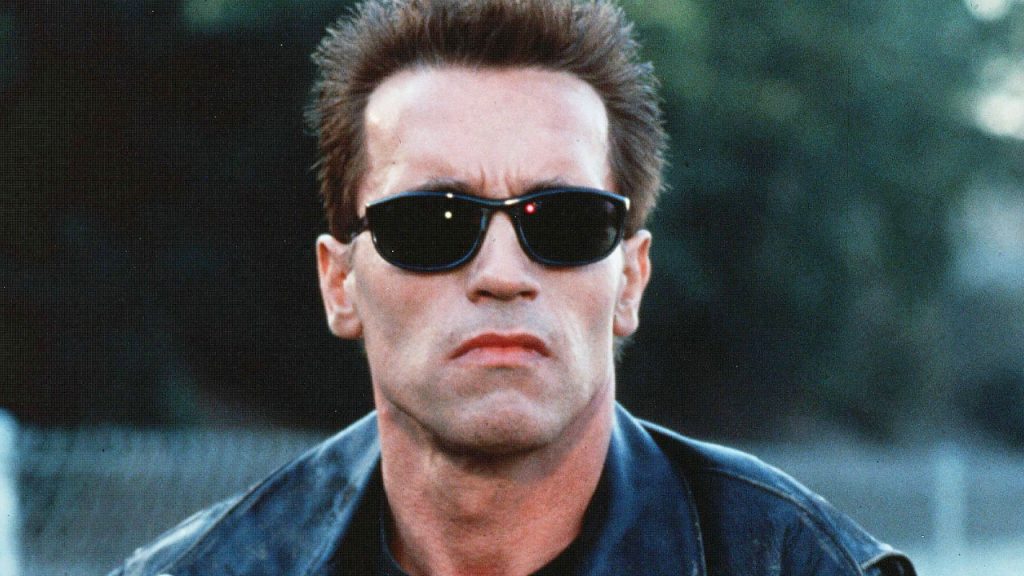

A major part of the study involved evaluating the security of the decentralized swarm. Because blockchain operates as a shared ledger and the training network used in the experiment was decentralized, the swarm showed resilience against conventional hacking attacks.

The researchers tested various scenarios involving "rogue" AI agents, some of which were programmed with outdated information or simple disruptive commands, while others were intentionally designed to undermine the network.

While the swarm was able to defend itself against less sophisticated agents, the study revealed a threshold beyond which smart rogue agents could potentially disrupt the collective intelligence of the system. If enough malicious agents infiltrated the swarm, they could eventually compromise its learning capabilities.
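The idea of a tipping point can be shown with a deliberately simple model. The article does not say which defense the swarm used or where the threshold lay, so everything here is an assumption: the sketch aggregates the agents' reported values with a median, a common robust-aggregation technique, and the function names and numbers are hypothetical.

```python
from statistics import median


def aggregate(updates):
    """Robust aggregation (assumed here): take the median of reported values."""
    return median(updates)


def swarm_estimate(n_honest, n_rogue, honest_value=1.0, rogue_value=100.0):
    """Combine reports from honest agents and rogue agents sending bad values."""
    updates = [honest_value] * n_honest + [rogue_value] * n_rogue
    return aggregate(updates)


# With a minority of rogue agents, the median ignores their reports.
print(swarm_estimate(n_honest=7, n_rogue=3))

# Once rogue agents form the majority, they control the result.
print(swarm_estimate(n_honest=4, n_rogue=6))
```

In this toy model the tipping point sits at half the swarm. The threshold in a real system would depend on its consensus and aggregation rules, which the article does not specify.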

Although the research is still in its early stages and has only been conducted through simulations, the findings offer promising insights into the future of AI development. In particular, the ability to create decentralized AI swarms that are secure, resilient, and capable of maintaining data privacy could have far-reaching implications.

This technology could allow AI systems from different organizations, or even different countries, to collaborate on complex projects without sacrificing data privacy or security. It might also play a key role in fields such as space exploration, where autonomous, decentralized systems must operate and learn independently in remote and unpredictable environments.

Ultimately, this research represents a pioneering step toward the development of decentralized, secure AI systems that could reshape how machines learn, collaborate, and function across industries.
