Ex-OpenAI researcher predicts AGI by 2027

Aschenbrenner's essays focus on AGI, AI that matches or surpasses human abilities across various cognitive tasks.

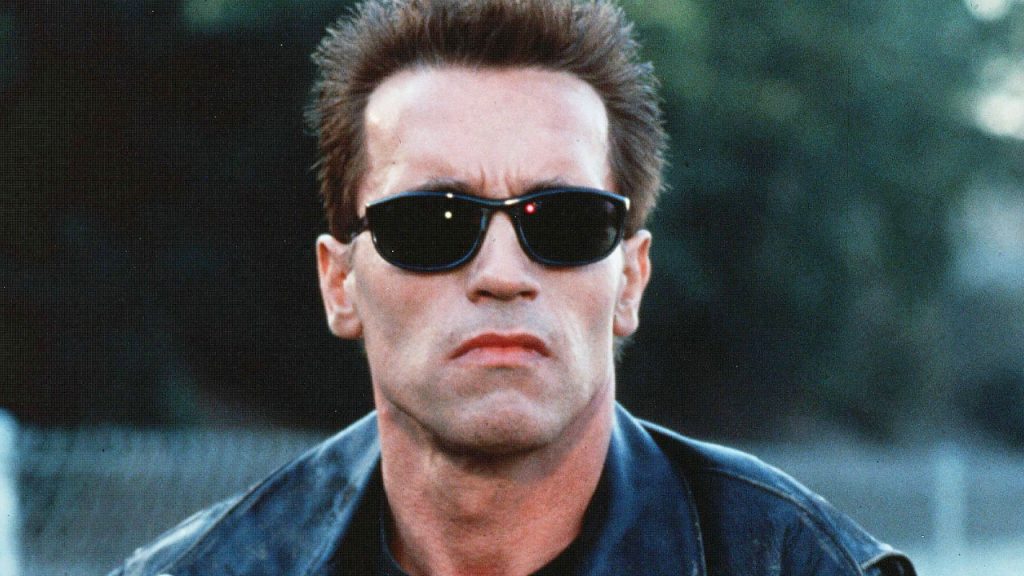

Leopold Aschenbrenner, formerly a safety researcher at OpenAI, has underscored the significance of artificial general intelligence (AGI) in his latest series of essays exploring artificial intelligence (AI).

Titled "Situational Awareness," the series examines the current state of AI systems and their potential trajectory over the next decade. The collection runs to 165 pages and is compiled into a PDF file last updated on June 4.

The essays focus predominantly on AGI, a form of AI capable of matching or surpassing human capabilities across a wide range of cognitive tasks. AGI is one of several classifications of artificial intelligence, alongside artificial narrow intelligence (ANI) and artificial superintelligence (ASI). Aschenbrenner argues that AI systems could come to possess intellectual capabilities comparable to those of a seasoned computer scientist. He also predicts that, by 2027, AI laboratories will be able to train general-purpose language models within minutes.

Anticipating the arrival of AGI, Aschenbrenner urges the AI community to confront that reality head-on. By his account, the leading minds in the field hold a perspective he calls "AGI realism," which rests on three core principles tied to national security and AI development in the United States.

Aschenbrenner has recently begun establishing an investment firm focused on AGI, with backing from influential figures such as Stripe CEO Patrick Collison, as disclosed on his blog. The venture underscores his commitment to advancing AGI research and development.
